
[tor-dev] The limits of timing obfuscation in obfs4

Web page with graphics: https://people.torproject.org/~dcf/obfs4-timing/

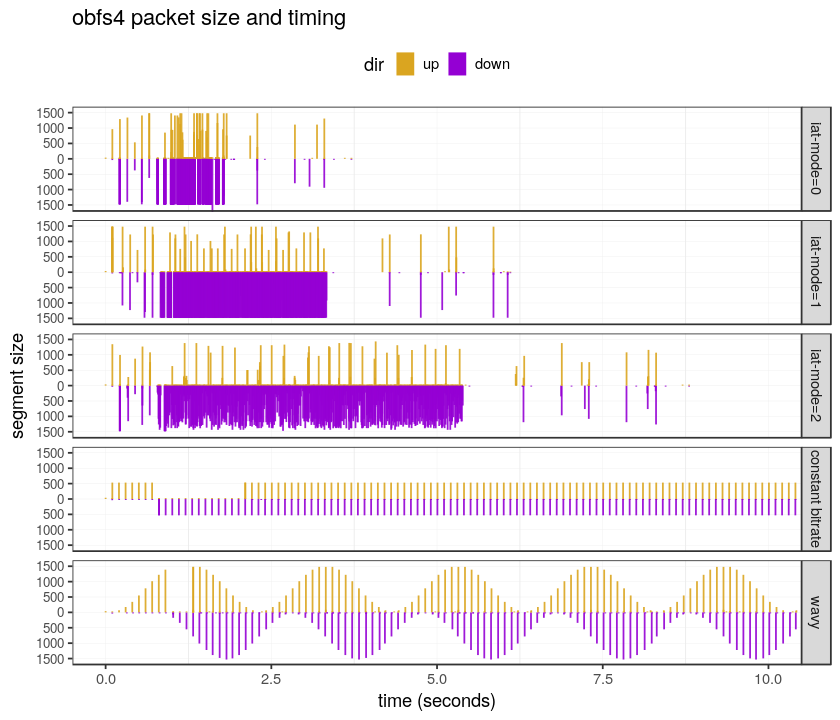

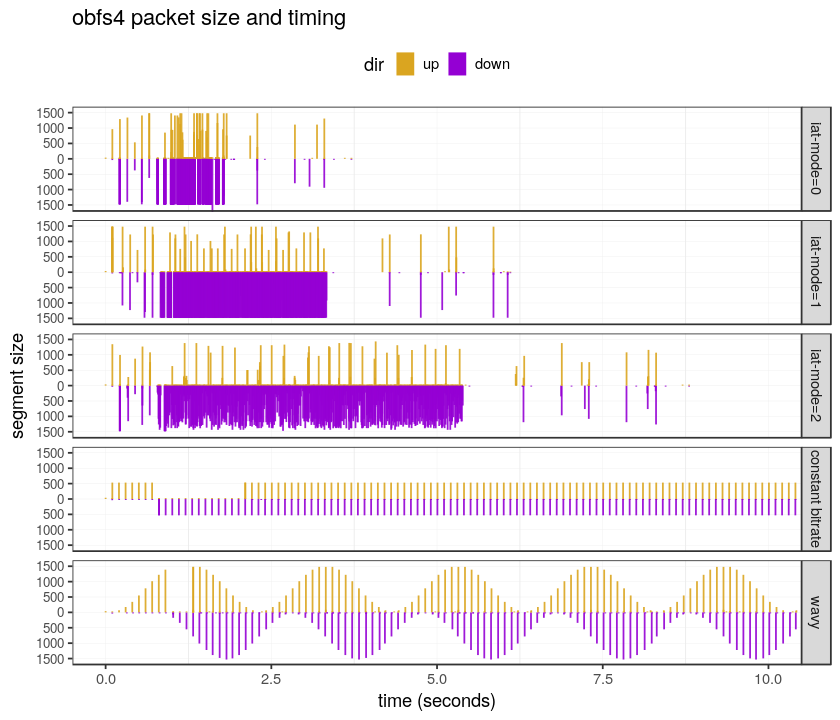

I was experimenting with ways to better obfuscate the timing signature
of Tor-in-obfs4, while staying compatible with the obfs4 specification.
I tried to make a constant bitrate mode that sends 500 bytes every
100 ms, in which the size and timing of obfs4 packets are independent of
the TLS packets underneath. It turns out that obfs4 mostly makes this
possible, with one exception: a gap in the client traffic while the
client waits for the server's handshake response, during which time the
client cannot send anything because it doesn't yet know the shared key.

Currently, the implementation of obfs4 sends data only when the
underlying process (i.e., tor) has something to send. When tor is quiet,
obfs4 is quiet; and when tor wants to send a packet, obfs4 sends a
packet without delay. obfs4 does add a random amount of padding to its
packets, which slightly alters the packet size signature but not the
timing signature. Even the modes that add short interpacket delays
(iat-mode=1 and iat-mode=2) only really have an effect on bulk
upload/download; they don't have much of an effect on the initial
handshake. See the first three rows of the attached graphic: the timing
of the first dozen or so packets hardly varies across the three modes.

This design, where obfs4 only sends packets when driven by tor, is an
implementation choice and isn't inherent in the protocol. obfs4's
framing structure [spec §5] allows for frames that contain only padding:

    +------------+----------+--------+--------------+------------+------------+
    |  2 bytes   | 16 bytes | 1 byte |   2 bytes    | (optional) | (optional) |
    | Frame len. |   Tag    |  Type  | Payload len. |  Payload   |  Padding   |
    +------------+----------+--------+--------------+------------+------------+
     \_ Obfs. _/ \____________ NaCl secretbox (Poly1305/XSalsa20) ____________/
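To make the layout concrete, here is a minimal Go sketch of the plaintext that would go inside the secretbox, and of the resulting size on the wire. It is an illustration under assumptions, not obfs4proxy's code: the helper names are hypothetical, the payload length is assumed to be big-endian, padding is zero bytes, and both the secretbox sealing and the length obfuscation are left out.

```go
package main

import "encoding/binary"

// Field sizes from the frame diagram.
const (
	frameLenSize   = 2  // obfuscated frame length
	tagSize        = 16 // Poly1305 authentication tag
	typeSize       = 1  // packet type
	payloadLenSize = 2  // payload length
)

// packetTypePayload is assumed to be the type byte shared by data frames
// and padding-only frames (hypothetical constant for this sketch).
const packetTypePayload byte = 0x00

// innerPacket builds the plaintext that would be sealed inside the
// secretbox: type | payload length | payload | padding.
func innerPacket(typ byte, payload []byte, padLen int) []byte {
	pkt := make([]byte, typeSize+payloadLenSize+len(payload)+padLen)
	pkt[0] = typ
	// Assumption: the payload length is encoded big-endian.
	binary.BigEndian.PutUint16(pkt[typeSize:], uint16(len(payload)))
	copy(pkt[typeSize+payloadLenSize:], payload)
	// make() zeroes the slice, so the trailing padLen bytes are zero padding.
	return pkt
}

// wireSize is the number of bytes a frame occupies on the wire: the
// 2-byte length field, the 16-byte tag, and the sealed inner packet.
func wireSize(payloadLen, padLen int) int {
	return frameLenSize + tagSize + typeSize + payloadLenSize + payloadLen + padLen
}
```

A frame built with `innerPacket(packetTypePayload, nil, 100)` has a payload length of zero and occupies `wireSize(0, 100)` = 121 bytes: it carries nothing but padding, which is what obfs4 could send while tor is quiet.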

obfs4 could send padding frames (at whatever size and rate) during the
times when tor is quiet. The current implementation, in pseudocode,
works like this (transports/obfs4/obfs4.go obfs4Conn.Write):

    on recv(data) from tor:
        send(frame(data))
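A minimal Go sketch of that write-through behavior, using a toy framing function in place of real obfs4 framing (the names `toyFrame`, `writeThroughConn`, and `countingWriter` are hypothetical): every write from tor becomes exactly one write on the wire, so the wire timing mirrors tor's timing.

```go
package main

import (
	"encoding/binary"
	"io"
)

// toyFrame stands in for obfs4's real framing: a 2-byte big-endian
// length followed by the data, with no encryption or padding.
func toyFrame(data []byte) []byte {
	f := make([]byte, 2+len(data))
	binary.BigEndian.PutUint16(f, uint16(len(data)))
	copy(f[2:], data)
	return f
}

// writeThroughConn frames and sends each write immediately.
type writeThroughConn struct {
	wire io.Writer
}

func (c *writeThroughConn) Write(b []byte) (int, error) {
	if _, err := c.wire.Write(toyFrame(b)); err != nil {
		return 0, err
	}
	return len(b), nil
}

// countingWriter stands in for the network and records the size of
// each write, showing one wire write per write from tor.
type countingWriter struct {
	sizes []int
}

func (w *countingWriter) Write(p []byte) (int, error) {
	w.sizes = append(w.sizes, len(p))
	return len(p), nil
}
```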

If it instead worked like this, then obfs4 could choose its own packet
scheduling, independent of tor's:

    on recv(data) from tor:
        enqueue data on send_buffer

    func give_me_a_frame():  # never blocks
        if send_buffer is not empty:
            dequeue data from send_buffer
            return frame(data)
        else:
            return frame(padding)

    in a separate thread:
        buf = []
        while true:
            while length(buf) < 500:
                buf = buf + give_me_a_frame()
            chunk = buf[:500]
            buf = buf[500:]
            send(chunk)
            sleep(100 ms)

The key idea is that give_me_a_frame never blocks: if it doesn't have
any application data immediately available, it returns a padding frame
instead. The independent sending thread calls give_me_a_frame as often
as necessary and obeys its own schedule. Note also that the boundaries
of chunks sent by the sending thread are independent of frame
boundaries. Yawning pointed me to this code in basket2 that uses the
same idea of independently sending padding according to a schedule:
https://git.schwanenlied.me/yawning/basket2/src/72f203e133c90a26f68f0cd33b0ce90ec6a6b76c/padding_tamaraw.go

I attach a proof-of-concept patch for obfs4proxy that makes it operate
in a constant bitrate mode. You can see its timing signature in the
fourth row of the attached graphic. With this proof of concept, I'm not
trying to claim that a constant bitrate is good for performance or for
censorship resistance. It's just an example of shaping obfs4's traffic
pattern in a way that is independent of the underlying stream. The fifth
row of the graphic shows a more complicated sine wave pattern; it could
be anything.

You will, however, notice an oddity in the fourth and fifth rows of the
graphic: a gap in the stream of client packets. This is what I alluded
to in the first paragraph, where after the client has sent its client
handshake but before it has received the server handshake, the client
doesn't yet know the shared key. Because every frame sent after the
handshake needs to begin with a tag that depends on the shared key, the
client cannot send anything, not even padding, until it receives the
server's response. During this time, the give_me_a_frame function has no
choice but to block.
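The blocking case can be sketched in Go with a channel that is closed once the session key is known; closing a channel wakes every receiver at once. This is a simplified sketch under assumptions: `nextFrame` and `keyReady` are hypothetical names, frames are plain byte slices, and the padding frame is a fixed placeholder rather than a real keyed frame.

```go
package main

// paddingFrame is a placeholder for a real padding frame, which can only
// be built once the session key is known.
var paddingFrame = make([]byte, 64)

// nextFrame returns queued data if any is available. Otherwise it blocks
// until either data arrives or keyReady is closed; once keyReady is
// closed it can always fall back to padding and never blocks again.
func nextFrame(queue <-chan []byte, keyReady <-chan struct{}) []byte {
	// Prefer data that is already queued (for example, the handshake itself).
	select {
	case data := <-queue:
		return data
	default:
	}
	// Nothing queued: wait until either data arrives or the key is ready.
	select {
	case data := <-queue:
		return data
	case <-keyReady:
		return paddingFrame
	}
}
```

Before `keyReady` is closed, a sender calling `nextFrame` with an empty queue simply waits, which is exactly the gap visible in the client's traffic.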

    k = ephemeral key
    p = random amount of padding
    m = MAC signifying end of padding
    a = authentication tag
    d = data frames

The obfs4 client handshake looks like this [spec §4]:

    k | p | m

The server doesn't reply until it has verified the client's MAC, which
proves that the client knows the bridge's out-of-band secret (this is
how obfs4 resists active probing). The server handshake reply is
similar, with the addition of an authentication tag:

    k | a | p | m  (different values than in the client handshake)

This means that no matter how you schedule packet sending, the traffic
will always have this form, with a ...... gap where the client is
waiting for the server's handshake to come back:

    client kpppppm......ddddddddddddddddddddddddddd
    server .......kapppmddddddddddddddddddddddddddd

There isn't a way to completely remove the client gap in obfs4 and still
follow the protocol. A future protocol could perhaps remove it (I say
perhaps because I haven't thought about the crypto implications) by
changing the client's handshake to have a second round of padding, which
it could send while waiting for the server to reply:

    k | p | m1 | p | m2

[spec] https://gitweb.torproject.org/pluggable-transports/obfs4.git/tree/doc/obfs4-spec.txt?id=obfs4proxy-0.0.7
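The client-handshake rounding hack in the attached patch (handshake_ntor.go) can be checked with a small Go function that reproduces its arithmetic. `roundPadLen` is a hypothetical name; `minHandshake` stands for `clientMinHandshakeLength`, whose value this post doesn't state, so the checks below use 64 as an assumed value, and `chunk` is the 500-byte send size.

```go
package main

// roundPadLen adjusts a random padding length so that the total client
// handshake size, padLen + minHandshake, is a multiple of chunk. Same
// arithmetic as the patch: round the total down to a multiple of chunk,
// and if that would make the padding negative, add one chunk back.
func roundPadLen(padLen, minHandshake, chunk int) int {
	padLen -= (padLen + minHandshake) % chunk
	if padLen < 0 {
		padLen += chunk
	}
	return padLen
}
```

For example, with an assumed minimum of 64 bytes, a padding length of 77 becomes 436 (total 500) and 1000 becomes 936 (total 1000). A side effect is that the padding length can no longer take every value in its original range, which is part of why the commit message calls it a hack.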

From 3699bbda1633b17eb5fae9ced6158df42fe1384b Mon Sep 17 00:00:00 2001
From: David Fifield <david@xxxxxxxxxxxxxxx>
Date: Sat, 10 Jun 2017 17:26:13 -0700
Subject: [PATCH] Queue writes through an independent write scheduler.

Send padding packets where there is no application data waiting.

The one place this doesn't work is in the client, after sending the
client handshake and before receiving the Y' and AUTH portions of the
server reply. During this time the client doesn't yet know the session
key and so cannot send anything. This version waits even longer, until
the entire server handshake has been received (including P_S, M_S, and
MAC_S).

This is set up to do sends in a fixed size of 500 bytes at a fixed rate
of 10 sends per second. As a hack, this rounds the client handshake size
to a multiple of 500 bytes, so that it doesn't stall waiting for the
final full chunk to be available to send.
---
 transports/obfs4/handshake_ntor.go |   8 ++-
 transports/obfs4/obfs4.go          | 122 ++++++++++++++++++++++++++++++++-----
 2 files changed, 114 insertions(+), 16 deletions(-)

diff --git a/transports/obfs4/handshake_ntor.go b/transports/obfs4/handshake_ntor.go

index ee1bca8..fb5935a 100644

--- a/transports/obfs4/handshake_ntor.go

+++ b/transports/obfs4/handshake_ntor.go

@@ -127,7 +127,13 @@ func newClientHandshake(nodeID *ntor.NodeID, serverIdentity *ntor.PublicKey, ses

hs.keypair = sessionKey

hs.nodeID = nodeID

hs.serverIdentity = serverIdentity

- hs.padLen = csrand.IntRange(clientMinPadLength, clientMaxPadLength)

+ padLen := csrand.IntRange(clientMinPadLength, clientMaxPadLength)

+ // Hack: round total handshake size to a multiple of 500 for CBR mode.

+ padLen = padLen - (padLen + clientMinHandshakeLength) % 500

+ if padLen < 0 {

+ padLen += 500

+ }

+ hs.padLen = padLen

hs.mac = hmac.New(sha256.New, append(hs.serverIdentity.Bytes()[:], hs.nodeID.Bytes()[:]...))

return hs

diff --git a/transports/obfs4/obfs4.go b/transports/obfs4/obfs4.go

index 304097e..7210210 100644

--- a/transports/obfs4/obfs4.go

+++ b/transports/obfs4/obfs4.go

@@ -34,6 +34,7 @@ import (

"crypto/sha256"

"flag"

"fmt"

+ "io"

"math/rand"

"net"

"strconv"

@@ -265,7 +266,13 @@ func (sf *obfs4ServerFactory) WrapConn(conn net.Conn) (net.Conn, error) {

iatDist = probdist.New(sf.iatSeed, 0, maxIATDelay, biasedDist)

}

- c := &obfs4Conn{conn, true, lenDist, iatDist, sf.iatMode, bytes.NewBuffer(nil), bytes.NewBuffer(nil), make([]byte, consumeReadSize), nil, nil}

+ c := &obfs4Conn{conn, true, lenDist, iatDist, sf.iatMode, bytes.NewBuffer(nil), bytes.NewBuffer(nil), make([]byte, consumeReadSize), make(chan []byte), nil, nil, make(chan bool)}

+

+ ws := newWriteScheduler(c)

+ go func() {

+ ws.run()

+ c.Close()

+ }()

startTime := time.Now()

@@ -290,8 +297,11 @@ type obfs4Conn struct {

receiveDecodedBuffer *bytes.Buffer

readBuffer []byte

- encoder *framing.Encoder

- decoder *framing.Decoder

+ writeQueue chan []byte

+

+ encoder *framing.Encoder

+ decoder *framing.Decoder

+ codersReadyChan chan bool

}

func newObfs4ClientConn(conn net.Conn, args *obfs4ClientArgs) (c *obfs4Conn, err error) {

@@ -312,7 +322,13 @@ func newObfs4ClientConn(conn net.Conn, args *obfs4ClientArgs) (c *obfs4Conn, err

}

// Allocate the client structure.

- c = &obfs4Conn{conn, false, lenDist, iatDist, args.iatMode, bytes.NewBuffer(nil), bytes.NewBuffer(nil), make([]byte, consumeReadSize), nil, nil}

+ c = &obfs4Conn{conn, false, lenDist, iatDist, args.iatMode, bytes.NewBuffer(nil), bytes.NewBuffer(nil), make([]byte, consumeReadSize), make(chan []byte), nil, nil, make(chan bool)}

+

+ ws := newWriteScheduler(c)

+ go func() {

+ ws.run()

+ c.Close()

+ }()

// Start the handshake timeout.

deadline := time.Now().Add(clientHandshakeTimeout)

@@ -343,9 +359,7 @@ func (conn *obfs4Conn) clientHandshake(nodeID *ntor.NodeID, peerIdentityKey *nto

if err != nil {

return err

}

- if _, err = conn.Conn.Write(blob); err != nil {

- return err

- }

+ conn.writeQueue <- blob

// Consume the server handshake.

var hsBuf [maxHandshakeLength]byte

@@ -370,6 +384,8 @@ func (conn *obfs4Conn) clientHandshake(nodeID *ntor.NodeID, peerIdentityKey *nto

okm := ntor.Kdf(seed, framing.KeyLength*2)

conn.encoder = framing.NewEncoder(okm[:framing.KeyLength])

conn.decoder = framing.NewDecoder(okm[framing.KeyLength:])

+ // Signal to the gimmeData function that our encoder is ready.

+ close(conn.codersReadyChan)

return nil

}

@@ -440,9 +456,11 @@ func (conn *obfs4Conn) serverHandshake(sf *obfs4ServerFactory, sessionKey *ntor.

if err := conn.makePacket(&frameBuf, packetTypePrngSeed, sf.lenSeed.Bytes()[:], 0); err != nil {

return err

}

- if _, err = conn.Conn.Write(frameBuf.Bytes()); err != nil {

- return err

- }

+ conn.writeQueue <- frameBuf.Bytes()

+

+ // Signal to the gimmeData function that our encoder is ready.

+ // Need to do this *after* writing the handshake to the writeQueue.

+ close(conn.codersReadyChan)

return nil

}

@@ -557,14 +575,13 @@ func (conn *obfs4Conn) Write(b []byte) (n int, err error) {

iatDelta := time.Duration(conn.iatDist.Sample() * 100)

// Write then sleep.

- _, err = conn.Conn.Write(iatFrame[:iatWrLen])

- if err != nil {

- return 0, err

- }

+ tmpBuf := make([]byte, iatWrLen)

+ copy(tmpBuf, iatFrame[:iatWrLen])

+ conn.writeQueue <- tmpBuf

time.Sleep(iatDelta * time.Microsecond)

}

} else {

- _, err = conn.Conn.Write(frameBuf.Bytes())

+ conn.writeQueue <- frameBuf.Bytes()

}

return

@@ -637,6 +654,81 @@ func (conn *obfs4Conn) padBurst(burst *bytes.Buffer, toPadTo int) (err error) {
 	return
 }
 
+func (conn *obfs4Conn) gimmeData() ([]byte, error) {
+	// Poll the writeQueue.
+	select {
+	case chunk := <-conn.writeQueue:
+		return chunk, nil
+	default:
+	}
+	// Otherwise wait until the session key is ready. Keep checking the
+	// writeQueue in case something comes in meanwhile.
+	select {
+	case chunk := <-conn.writeQueue:
+		return chunk, nil
+	case <-conn.codersReadyChan:
+	}
+	// No actual data to send, but we have a session key, so send a padding
+	// frame. The exact size doesn't matter much.
+	var frameBuf bytes.Buffer
+	err := conn.makePacket(&frameBuf, packetTypePayload, nil, 1024)
+	if err != nil {
+		return nil, err
+	}
+	return frameBuf.Bytes(), nil
+}
+
+type writeScheduler struct {
+	obfs4 *obfs4Conn
+	buf   bytes.Buffer
+}
+
+func newWriteScheduler(obfs4 *obfs4Conn) *writeScheduler {
+	var ws writeScheduler
+	ws.obfs4 = obfs4
+	return &ws
+}
+
+func (ws *writeScheduler) Read(b []byte) (n int, err error) {
+	for {
+		if ws.buf.Len() > 0 {
+			return ws.buf.Read(b)
+		}
+		ws.buf.Reset()
+		data, err := ws.obfs4.gimmeData()
+		if err != nil {
+			return 0, err
+		}
+		ws.buf.Write(data)
+	}
+}
+
+func (ws *writeScheduler) run() error {
+	var buf [500]byte
+	sched := time.Now()
+	for {
+		n, err := io.ReadFull(ws, buf[:])
+		_, err2 := ws.obfs4.Conn.Write(buf[:n])
+		if err2 != nil {
+			return err2
+		}
+		if err == io.EOF || err == io.ErrUnexpectedEOF {
+			break
+		} else if err != nil {
+			return err
+		}
+
+		now := time.Now()
+		sched = sched.Add(100 * time.Millisecond)
+		if sched.Before(now) {
+			sched = now
+		} else {
+			time.Sleep(sched.Sub(now))
+		}
+	}
+	return nil
+}
+
 func init() {
 	flag.BoolVar(&biasedDist, biasCmdArg, false, "Enable obfs4 using ScrambleSuit style table generation")
 }

--

2.11.0

_______________________________________________
tor-dev mailing list
tor-dev@xxxxxxxxxxxxxxxxxxxx
https://lists.torproject.org/cgi-bin/mailman/listinfo/tor-dev